OpenAI has taken the wraps off Sora, its latest AI-driven content creation model that can create stupidly high-quality content from text prompts alone.

There’s a joke doing the rounds that you might have heard: I didn’t sign up for a future where humans stack shelves and clean toilets while the robots make video and music. I’ve never been sure whether it’s funny or not, and I’m even less sure this morning in the wake of OpenAI’s announcement of its text-to-video model, Sora.

It is, of course, not the first AI text-to-video engine. Google, with Lumiere, and Stability AI have both shown off their own models in recent months. But thanks to the ubiquitous ChatGPT, OpenAI is something of a market leader in this field, and so this is probably going to have more impact.

A quick gif showing what Sora can do. Full 60-second video at the end

Sora also seems to have hit a sweet spot, making a big splash at a time when the tech is producing some frighteningly realistic content. The difference there can be a matter of weeks, as development cycles have shrunk from years to months and are now shorter still. You know, this isn’t January 2024 any more, people; it’s February 2024. Get with the program!

OpenAI has launched Sora with a whole slew of different videos designed to show off its capabilities. An old-school Land Rover knockoff is trailed along a dirt road in a following drone shot, mammoths charge at the camera, a woman blinks in extreme close-up…and so on.

One of the few clues that this is not a conventional shot is the flubbing of the trademarked name 'Land Rover'

The video above was generated from the following prompt: “The camera follows behind a white vintage SUV with a black roof rack as it speeds up a steep dirt road surrounded by pine trees on a steep mountain slope, dust kicks up from it’s tires, the sunlight shines on the SUV as it speeds along the dirt road, casting a warm glow over the scene. The dirt road curves gently into the distance, with no other cars or vehicles in sight. The trees on either side of the road are redwoods, with patches of greenery scattered throughout. The car is seen from the rear following the curve with ease, making it seem as if it is on a rugged drive through the rugged terrain. The dirt road itself is surrounded by steep hills and mountains, with a clear blue sky above with wispy clouds.”

There is the odd AI tell that makes some of them jar slightly. “The current model has weaknesses,” admits OpenAI, saying that it might have trouble simulating the physics of a complex scene and may not understand specific instances of cause and effect. “For example, a person might take a bite out of a cookie, but afterwards, the cookie may not have a bite mark.”

This is impressive: Sora generates not only the view but also the detailed reflections that change with the lighting

But the capability is definitely there in spades. Sora is a diffusion model, which generates a video by starting with one that looks like static noise and gradually transforming it by removing the noise over many steps.
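
For the curious, here is a toy sketch of that "start with static, remove the noise over many steps" idea in Python. It is emphatically not Sora's code, and every name in it (`make_target`, `toy_denoiser`, `generate`) is made up for illustration. A real diffusion model uses a trained neural network to estimate the noise; this sketch cheats by handing the denoiser the finished clip, purely so the loop can be run and watched.

```python
import random


def make_target(frames=4, pixels=8):
    """Hypothetical 'clean' clip: a bright dot that moves one pixel per frame."""
    return [[1.0 if p == f else 0.0 for p in range(pixels)] for f in range(frames)]


def toy_denoiser(noisy, target):
    """Stand-in for a trained network: returns an estimate of the noise in `noisy`.

    Assumption for illustration only: we compute the noise exactly from the
    known target. A real model has to learn this estimate from training data.
    """
    return [[n - t for n, t in zip(nrow, trow)] for nrow, trow in zip(noisy, target)]


def generate(target, steps=50, seed=0):
    """Start from static and strip a little of the estimated noise at each step."""
    rng = random.Random(seed)
    # Pure Gaussian noise with the same shape as the clip (frames x pixels).
    video = [[rng.gauss(0.0, 1.0) for _ in row] for row in target]
    errors = []
    for step in range(steps):
        noise = toy_denoiser(video, target)
        # Remove a growing fraction of the remaining noise, so the last step
        # removes whatever is left. All frames are updated together.
        frac = 1.0 / (steps - step)
        video = [
            [v - frac * e for v, e in zip(vrow, erow)]
            for vrow, erow in zip(video, noise)
        ]
        # Track the worst per-pixel distance from the clean clip.
        errors.append(
            max(abs(v - t) for vrow, trow in zip(video, target) for v, t in zip(vrow, trow))
        )
    return video, errors


if __name__ == "__main__":
    clip, errors = generate(make_target())
    print("error after first step:", errors[0])
    print("error after last step: ", errors[-1])
```

Run it and the error shrinks step by step until the moving dot emerges from the static. Note that the loop updates every frame in the same pass rather than one frame at a time, which is a loose nod to the whole-clip approach and nothing more.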

It is capable of generating entire videos all at once or extending generated videos to make them longer. By giving the model foresight of many frames at a time, the OpenAI team has solved the challenging problem of making sure a subject stays the same even when it goes out of view temporarily (something that has tripped up many AI animations in the past). It can also take an existing still image and generate a video from it, or take an existing video and extend it or fill in missing frames. It works in Full HD, and there’s a technical report that has more info for the curious.

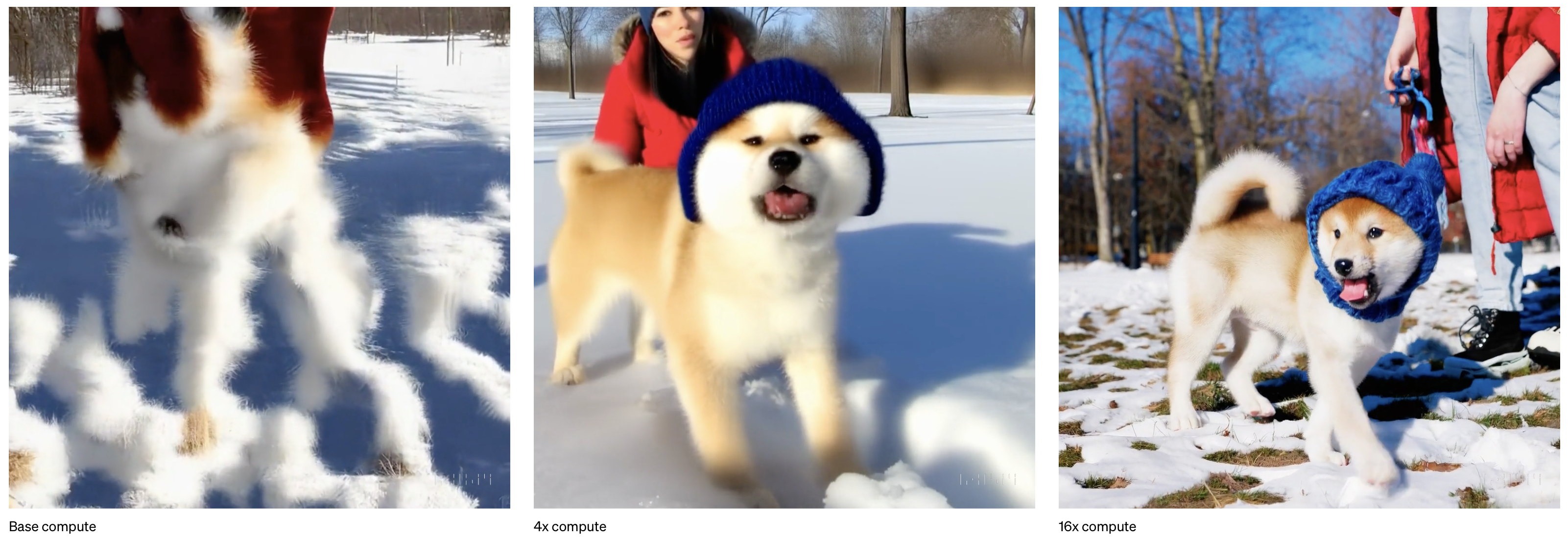

How throwing more computer resources at a video evolves it dramatically

OpenAI says that, for now anyway, Sora is becoming available to red teamers (domain experts in areas like misinformation, hateful content, and bias) who will be adversarially testing the model to assess critical areas for harms or risks.

The company also says that it is building tools to help detect misleading content, such as a detection classifier that can tell when a video was generated by Sora, and that it plans to include C2PA metadata in the future if the model is deployed in an OpenAI product. With a whole host of elections taking place around the world this year, that stuff really needs to be baked in from the start too.

“We are also granting access to a number of visual artists, designers, and filmmakers to gain feedback on how to advance the model to be most helpful for creative professionals,” the company says.

Did someone say, ‘Delete it and fire all the research materials into the heart of the sun’? Shhh... at the back.
