The powers that be have woken up to the potential for abuse of AI systems. Is this the beginning of the beginning, or the beginning of the end?

AI grabbed some big headlines at the end of 2022 thanks to ChatGPT, but significant advances were made earlier in the year with the release of a number of AI image generators. Yet despite their success, some chickens have begun to come home to roost, and some in power are concerned. These image generators have also ruffled plenty of feathers among stock image sites.

To begin with, Getty Images is suing the creators of Stable Diffusion for copyright violations. It turns out that if you want to train AI image generation software, you need to feed it rather a lot of different pictures. And yes, you've guessed it, cyber eyes have been sifting through one of the most famous stock image collections on the planet, and Getty is not a happy bunny.

Getty Images stated in a press release, "It is Getty Images’ position that Stability AI unlawfully copied and processed millions of images protected by copyright and the associated metadata owned or represented by Getty Images absent a license to benefit Stability AI’s commercial interests and to the detriment of the content creators.

"Getty Images believes artificial intelligence has the potential to stimulate creative endeavors. Accordingly, Getty Images provided licenses to leading technology innovators for purposes related to training artificial intelligence systems in a manner that respects personal and intellectual property rights. Stability AI did not seek any such license from Getty Images and instead, we believe, chose to ignore viable licensing options and long‑standing legal protections in pursuit of their stand‑alone commercial interests."

AI needs lots of source material

Stable Diffusion was trained on over 2.3bn images, and, thanks to the wonders of open source software, we can get a rough idea of what proportion of them came from the Getty library.

Waxy took a sample of 12 million of the images used and found that 15,000 of them (about 0.125%) had come from the Getty library. That sample is, of course, only a small fraction of the full 2.3bn images, so we don't know what the true total is. What is clear is that the open nature of the training data gives Getty evidence that its library was used, potentially unlawfully.

A separate lawsuit has been launched by a group of artists against the companies behind Stable Diffusion and Midjourney, again for copyright infringement. This case goes further than the Getty lawsuit, though, in that it also alleges that the AI is 'remixing' content without permission. A statement from the group describes the AI image generators as "a 21st-century collage tool that remixes the copyright works of millions of artists whose work was used as training data."

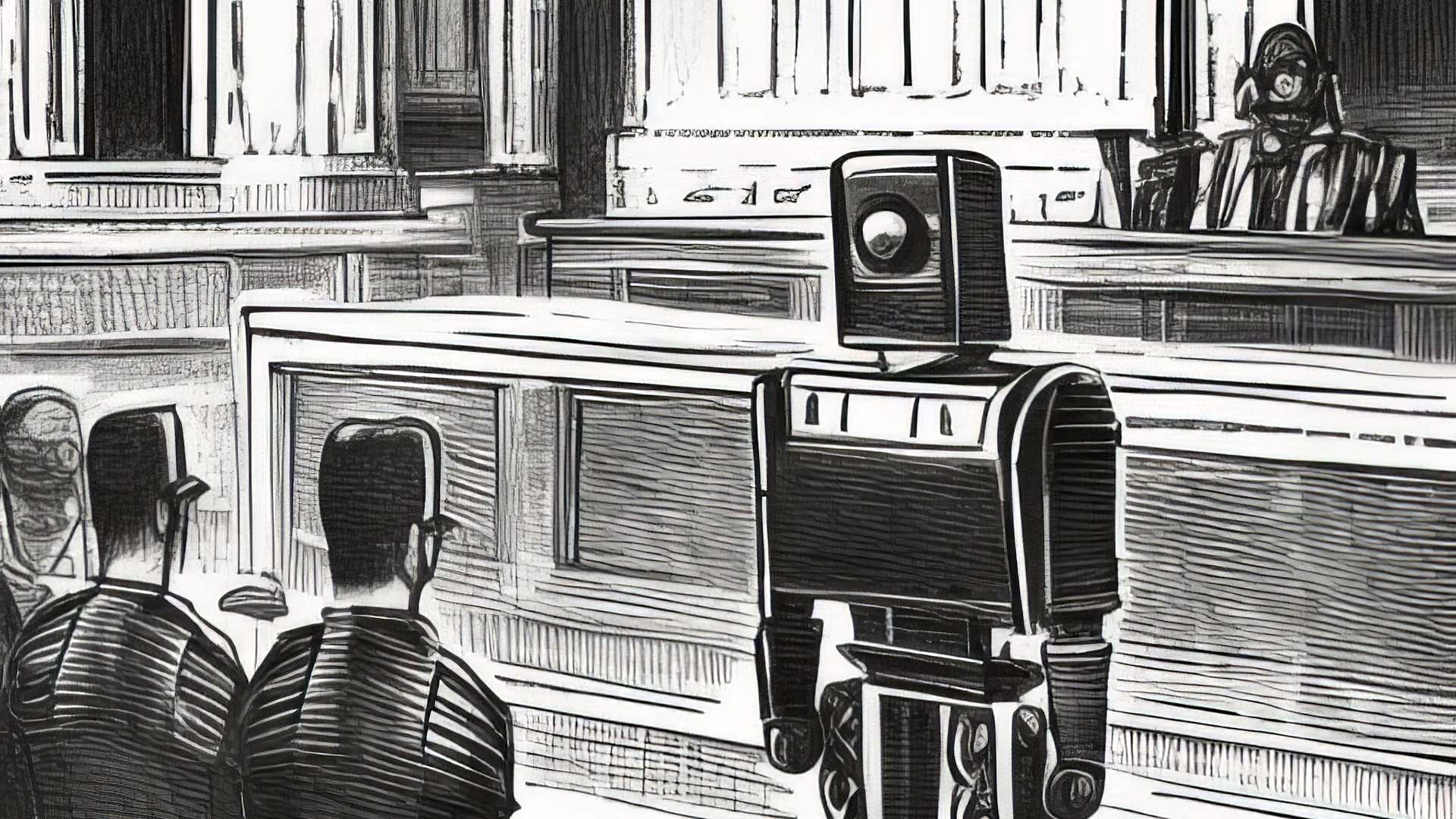

That latter claim is where things could come unstuck for the plaintiffs, because these AI models do not generally remix existing images, although that could be a tricky thing to explain to a judge. Tech lawsuits like this are fraught with potential problems, simply because everything has to be explained to, and understood by, a judge. Many will remember how a certain lawsuit between one big tech firm and one big camera firm went a few years back; much of that decision arguably hinged on the tech firm, Apple, not explaining things as thoroughly as it could have. When even the creators of some AI systems are not sure how their own creations work, you can see the problems this creates.

The EU is bringing in AI regulations

Potential lawsuits are one thing, but another spanner has been thrown into the works by the EU, which wants to regulate AI early and nip problems in the bud before things become too much of a Wild West.

As noted by PetaPixel, the AI Act is the first law of its kind from a major regulator anywhere in the world. The new law seeks to make things a lot more transparent by requiring companies to be open about the sources used to train their AI. Once enacted, the law will require all companies that sell AI products within the EU to comply or face fines of up to 6% of their worldwide turnover.

Not surprisingly, this has caused a lot of consternation among developers and startups, who believe that such a law will make them less competitive. Nor is it the only front on which the new technology is being challenged. Organisations as diverse as conferences and educational establishments have banned AI-generated content. China, meanwhile, has approached the issue out of concern about deepfakes and has already issued regulations prohibiting the creation of AI-generated media unless it carries a clear label (a watermark, for instance) indicating precisely what it is.

As a consumer of these new technologies, it's hard to argue with the EU's sentiment. AI development has the potential to get out of control very quickly, and with governments often playing catch-up on such things, it's refreshing to see a regulatory body recognising the risks early on. Whether the law will truly reduce competitiveness remains to be seen, but in all likelihood such protests will fade into history.
