It's easy to talk glibly about the metaverse as an interconnected nexus of 3D worlds without asking some pretty fundamental questions. One of these is: who's going to make the metaverse?

You don't have to think about it for long to see the problem. As long as we imagine that all we need to make a metaverse is a bunch of computer games, it doesn't seem all that difficult. But that doesn't work for a true metaverse. Why? Because even if you took every game in existence and connected them seamlessly, you still wouldn't have a viable virtual world, never mind a universe, let alone several universes.

So it's time for a (virtual) reality check. And that reality is that a true metaverse, one with no boundaries, is a thing of infinite complexity, not just in a physical sense, but in terms of interactions and outcomes. So the problem boils down to this: how do we design something infinitely complex with finite tools?

It's not impossible, although you could argue that it's improbable. A bit like evolution, say. Evolution leads to absolutely staggering complexity and produces seemingly impossibly complicated biological machines. Us, for example. Its main tool is natural selection acting on random variation. But it's a pretty powerful one, because it applies in all circumstances. And since the number of circumstances is effectively endless, so, at least in principle, is the number of possible life forms.

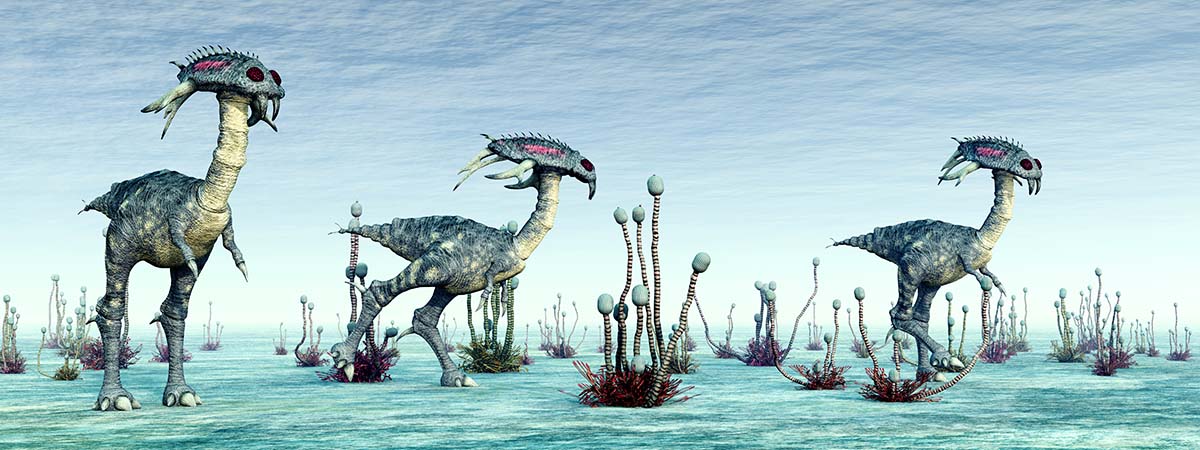

Computers can't be trusted to generate useful evolution on their own. Image: Shutterstock.

Evolution

So maybe that's what we need for the metaverse: evolution. After all, computers are well known for speeding things up.

I don't doubt that some form of evolution will play a part in the metaverse. It would be hard for it not to, especially in goal-seeking contexts. But even a hyper-accelerated evolution courtesy of the most powerful computing resources on the planet isn't the complete answer. Here's at least one reason why.
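
To make "goal-seeking" a little more concrete, here is a minimal sketch in Python of the mutate-and-select loop behind most evolutionary algorithms. It's a toy, built on the assumption that the "goal" is simply a fixed pattern of bits; the names evolve, fitness and mutate are illustrative and not taken from any particular library. Notice that the fitness function is the only thing that defines what "good" means: the loop will happily keep anything that scores well, however it gets there.

```python
import random


def fitness(genome, target):
    """Score a genome by how many positions match the target (higher is better)."""
    return sum(g == t for g, t in zip(genome, target))


def mutate(genome, rate, rng):
    """Copy a genome, flipping each bit independently with probability `rate`."""
    return [1 - g if rng.random() < rate else g for g in genome]


def evolve(target, population_size=50, generations=200, rate=0.02, seed=0):
    """Evolve random bit strings towards `target`; returns (best genome, generations used)."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in target] for _ in range(population_size)]
    for generation in range(generations):
        # Rank by fitness and stop early if the goal has been reached.
        population.sort(key=lambda g: fitness(g, target), reverse=True)
        if fitness(population[0], target) == len(target):
            return population[0], generation
        # Selection: only the fitter half survives...
        survivors = population[: population_size // 2]
        # ...and each survivor leaves one mutated offspring (variation).
        population = survivors + [mutate(p, rate, rng) for p in survivors]
    population.sort(key=lambda g: fitness(g, target), reverse=True)
    return population[0], generations


if __name__ == "__main__":
    target = [1, 0] * 16
    best, used = evolve(target)
    print(fitness(best, target), "of", len(target), "bits matched after", used, "generations")
```

Because the survivors are carried over unchanged, the best score never goes down from one generation to the next; all the creativity comes from random mutation, and all the direction comes from the fitness function.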

Have you ever seen those "artificial life" computer programs that are supposed to simulate evolution? The idea certainly isn't new. One of the best-known ancestors is the Game of Life, devised by the mathematician John Horton Conway in 1970. It's rather basic, as you'd expect: a grid of cells that are born, survive or die according to a few simple rules about their neighbours. More recent programs use genuine evolutionary algorithms to tackle much more complicated tasks, like inventing a multi-legged creature and teaching it to climb over obstacles.
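
For a sense of just how basic the Game of Life is, its entire rule set fits in a few lines. This sketch stores live cells as a set of (x, y) coordinates on an unbounded grid; the function name step is my own. A dead cell with exactly three live neighbours is born, a live cell with two or three live neighbours survives, and every other cell dies or stays dead. There is no goal and no selection, which is why it's usually described as a cellular automaton rather than a simulation of evolution.

```python
from collections import Counter


def step(live):
    """Advance Conway's Game of Life by one generation.

    `live` is a set of (x, y) tuples for the live cells on an unbounded grid.
    """
    # Count the live neighbours of every cell adjacent to at least one live cell.
    counts = Counter(
        (x + dx, y + dy)
        for x, y in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # Birth on exactly 3 neighbours; survival on 2 or 3; everything else dies.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


if __name__ == "__main__":
    blinker = {(0, 1), (1, 1), (2, 1)}  # three cells in a row
    print(sorted(step(blinker)))        # the row turns into a column
```

Three cells in a row (a "blinker") flip between horizontal and vertical forever, and a two-by-two block never changes at all. Everything more elaborate in the Game of Life emerges from those same rules.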

It's fascinating to watch, but there's one massive problem, which is that programs like this don't invent creatures that look like horses, giraffes or gorillas. Far from it. You're far more likely to see results that are just plain weird. Like a seven-legged... thing that walks with five legs, waving the sixth frantically in the air to counterbalance the lack of symmetry, while the seventh drags along the ground doing absolutely nothing useful at all.

Natural evolution went through a phase like that, during the Cambrian period. The so-called Cambrian explosion produced a burst of weird and sometimes wonderful life forms. Unfortunately, many of the strangest lineages left no descendants, perhaps because they were so odd and, well, speculative, that they simply weren't suited to this world. But that extraordinary variety probably seeded many of the innovations that are fundamental to today's creatures.

So, because of the trend towards oddness in the short term, we can't rely on artificial evolution to give us a convincing metaverse. Instead, we need more organisation and purpose than that.

Getting organised

So, yes, that implies human input and, at some level, supervision. None of which solves the problem of where all the detail in this all-embracing, immersive virtual world will come from. And remember: we're not just talking about appearances here. Every object in the metaverse will have to contain and share its own data. That's data about the physics of the object, such as texture, softness, rigidity and elasticity: any number of physical characteristics that will need to interact with other things.
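
What might "an object carrying its own physics data" look like? Here is a minimal sketch, assuming a hypothetical Material record and a hypothetical mix_contact function. The values are illustrative only. When two objects touch, their individual properties have to be combined into a single value for the contact. Here that is done with the geometric mean for friction and the maximum for restitution (bounciness), which is how Box2D mixes them; other engines use averages, minimums or per-pair tables instead.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Material:
    """Hypothetical physical data that an object carries with it."""
    name: str
    density: float      # kg per cubic metre
    friction: float     # dimensionless friction coefficient
    restitution: float  # bounciness: 0 = no bounce, 1 = perfectly elastic


def mix_contact(a: Material, b: Material) -> tuple[float, float]:
    """Combine two materials into the (friction, restitution) used when they touch.

    Geometric-mean friction and maximum restitution is one common convention,
    not the only one.
    """
    friction = math.sqrt(a.friction * b.friction)
    restitution = max(a.restitution, b.restitution)
    return friction, restitution


if __name__ == "__main__":
    # Illustrative values, not measured data.
    rubber = Material("rubber", 1100.0, 0.9, 0.8)
    ice = Material("ice", 917.0, 0.1, 0.1)
    print(mix_contact(rubber, ice))
```

The point of the sketch is that neither object needs to know about the other in advance: as long as every object publishes its properties in a shared format, any two of them can interact.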

In the end, I think the metaverse will have to be able to generate itself, not chaotically, but according to rules. Because if it's genuinely going to have no limits (and if it has limits, then it's not the metaverse), then it's going to have to be self-generative.
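
One common way to make a world generate itself according to rules is to derive everything from a seed plus the coordinates being visited, so that content is computed on demand rather than stored. The sketch below is a toy built on that assumption: the tile types and probabilities are made up, and generate_chunk and chunk_rng are hypothetical names. Because the same seed and coordinates always produce the same chunk, there is no hard boundary. Any location, however distant, can be generated when someone arrives there, and it will look the same on every visit.

```python
import hashlib
import random


def chunk_rng(world_seed: int, cx: int, cy: int) -> random.Random:
    """Derive a reproducible random generator for the chunk at (cx, cy).

    hashlib is used instead of Python's built-in hash(), which is randomised
    per process for strings and would give a different world on each run.
    """
    key = f"{world_seed}:{cx}:{cy}".encode()
    digest = hashlib.sha256(key).digest()
    return random.Random(int.from_bytes(digest[:8], "big"))


def generate_chunk(world_seed: int, cx: int, cy: int, size: int = 8) -> list[list[str]]:
    """Build a size x size grid of tiles for one chunk from toy rules plus the seed."""
    rng = chunk_rng(world_seed, cx, cy)
    grid = []
    for _ in range(size):
        row = []
        for _ in range(size):
            roll = rng.random()
            # Toy rules: roughly 10% water, 25% trees, the rest grass.
            if roll < 0.10:
                row.append("water")
            elif roll < 0.35:
                row.append("tree")
            else:
                row.append("grass")
        grid.append(row)
    return grid


if __name__ == "__main__":
    # A chunk a billion steps from the origin costs no more than one next door.
    for row in generate_chunk(42, 10**9, -7):
        print(" ".join(tile[0] for tile in row))
```

Real systems typically layer coherent noise (Perlin or simplex noise, for example) on top of this, so that neighbouring chunks join up smoothly; this sketch treats each chunk independently to keep the idea visible.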

While that may seem a bit far-fetched, I'd say it's well within reach. Game engines such as Unity and Unreal, along with other 3D world frameworks, can already give us realism and real-time visualisation with an extremely high level of credibility. But none of this will give us a metaverse if humans have to design everything.

I'm always staggered by the quality of work from games studios. The amount of talent, vision and gritty perseverance that goes into a modern computer game is inspiring. It sometimes seems there's nothing the top games studios can't do. Within a game, and within finite boundaries, I've no doubt that's true. But what they can't do, and never will, is make an infinite virtual world. It's hard enough making a single game. So what happens when someone goes "off-piste" in a virtual world and "breaks the frame", so to speak? Today, the answer is nothing, because there's nothing there. You'll fall off the edge of the world.

But what if we could distil the essence of a 3D world into a set of procedural rules, like the rules for designing a city? You could teach a generative metaverse program what a city is "like", what a forest is "like" or what an alien planet is "like".
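
Lindenmayer systems (L-systems) are one long-established way of writing down what a plant is "like" as a handful of rewriting rules. Here is a minimal sketch: expand applies the rules to every symbol in parallel, and interpret reads the result as turtle-graphics commands (F draws a step forward, + and - turn, [ and ] save and restore position). The rule in which F becomes F[+F]F[-F]F is a classic branching-plant example from the L-system literature; the 25-degree angle and the function names are my own choices for illustration. A city or an alien planet would need far richer rules, but the principle, a small grammar expanded into a large amount of detail, is the same.

```python
import math


def expand(axiom: str, rules: dict[str, str], iterations: int) -> str:
    """Rewrite every symbol in parallel using `rules`; symbols with no rule are copied."""
    s = axiom
    for _ in range(iterations):
        s = "".join(rules.get(ch, ch) for ch in s)
    return s


def interpret(commands: str, step: float = 1.0, angle_deg: float = 25.0):
    """Turn an L-system string into line segments using turtle graphics."""
    x, y, heading = 0.0, 0.0, 90.0  # start at the origin, pointing "up"
    stack, segments = [], []
    for ch in commands:
        if ch == "F":  # draw one step forward
            nx = x + step * math.cos(math.radians(heading))
            ny = y + step * math.sin(math.radians(heading))
            segments.append(((x, y), (nx, ny)))
            x, y = nx, ny
        elif ch == "+":  # turn left
            heading += angle_deg
        elif ch == "-":  # turn right
            heading -= angle_deg
        elif ch == "[":  # remember where the branch starts
            stack.append((x, y, heading))
        elif ch == "]":  # return to the branch point
            x, y, heading = stack.pop()
    return segments


if __name__ == "__main__":
    plant = {"F": "F[+F]F[-F]F"}
    for n in range(4):
        print(n, "iterations:", len(interpret(expand("F", plant, n))), "branch segments")
```

Each iteration multiplies the number of segments by five, so one short rule quickly produces far more detail than anyone would want to draw by hand.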

I use the word "like" with precision here because that is one way to hint at the essence of a conscious or sentient experience. To have an experience of something is to know what it is "like" to have that experience.

This metropolis in The Matrix Awakens is entirely procedurally generated. Image: Unreal Engine.

If we can distil that "essence of the experience" into a set of rules, we can generate fully authentic experiences. Will it ever happen? I'd argue we're at least in the foothills of that mountain range. Remember the trailer for The Matrix Awakens, the Unreal Engine 5 demo? It's a breathtakingly detailed romp through a fictional but utterly convincing cityscape, where even the most minor details on the furthest buildings aren't just textures but complex 3D artefacts.

It was made with Unreal Engine, using procedural generation, and is an astonishing achievement that goes way beyond what would be possible with previous techniques. But that's just the start.

What we'll see next, and we'll definitely need this, is a full-blown AI engine at the heart of metaverse creation and visualisation. One that understands what a multiplicity of human experiences of the world are "like".

If we can get this right - and control and manage it without diminishing the quality of the experience - then we're in for a hell of a ride.
