If you're wondering why the graphics in your favourite childhood video game look terrible on modern displays, here's your explanation.

If you're lucky enough to have a twenty-year-old CRT-based video monitor stashed somewhere, you might already know how much money they go for on eBay. Collection only, of course, because they weigh half a Jupiter, but there's a certain subset of humanity that really, really likes a Sony BVM-series display and is prepared to pay good money for one: retro computing enthusiasts.

There are a number of reasons why your old Sega looks better on a display of similar vintage.

Many early computers existed in a hybrid state of digital and analogue technology. Internally, almost all of them treated graphics as pixels, but there was no practical way of sending that pixel data out of the computer digitally at a high enough speed to drive a video display. All the machine could do was transmit the values of its pixels as voltage levels, as they were calculated, creating an analogue video signal.

Modern flat panel displays need digital data. In an analogue video signal we can easily identify rows of pixels, but two horizontally adjacent pixels aren't separated in any electronic sense: if they're the same colour, the voltage simply doesn't change between them. A modern monitor aiming to display this signal can digitally sample each row and record the voltage levels, but there's no guarantee (and not much likelihood) that those samples will line up with the original pixels.
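Here's a minimal Python sketch of that problem. It assumes an idealised row whose pixels have perfectly sharp edges, and it labels each pixel with its own index so you can see which pixels each sample picks up. The function names are hypothetical, and real sampling hardware is considerably more complicated.

```python
def analogue_row(pixels, t):
    """Return the idealised signal level at time t, measured in source pixels.

    Pixel i occupies the interval [i, i + 1). Nothing in the waveform itself
    marks where one pixel ends and the next begins.
    """
    index = min(int(t), len(pixels) - 1)
    return pixels[index]


def sample_row(pixels, n_samples):
    """Sample one row at n_samples evenly spaced points across its width."""
    step = len(pixels) / n_samples
    return [analogue_row(pixels, (k + 0.5) * step) for k in range(n_samples)]


row = list(range(10))  # ten source pixels, each labelled with its own index

print(sample_row(row, 10))  # rate matches the source: every pixel recovered once
print(sample_row(row, 14))  # too many samples: some pixels captured twice
print(sample_row(row, 8))   # too few samples: some pixels never captured
```

With ten samples the row comes back intact. With fourteen, pixels 1, 3, 6 and 8 are each captured twice. With eight, pixels 2 and 7 vanish altogether.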

If you don't remember Sonic's graphics being as blocky as this, it could be down to the way monitors used to work.

Display smear

(There's a side issue to investigate here. Analogue signals can be smeary, and because the picture is built from horizontal lines, the smear affects pictures horizontally but not vertically. Some game designers leveraged that fact by using alternating pixel colour patterns, known as dithering, to simulate more colours than the hardware could actually display, on the assumption that the smear would mix the colours. Even in an ideal world, if a modern display were to recover all the pixels precisely, these dither patterns would become inappropriately visible, so it's valid to consider applying deliberate blur.)
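The effect of that smear on a dither pattern can be sketched in a few lines of Python. This sketch assumes a simple three-tap low-pass kernel of 1/4, 1/2, 1/4 as a stand-in for the limited bandwidth of a real analogue video path. Actual smear depends on the machine, the cable and the monitor. The function names are hypothetical.

```python
def smear_row(row, kernel=(0.25, 0.5, 0.25)):
    """Apply a small horizontal low-pass filter to one row of pixel values.

    The 3-tap kernel is an illustrative stand-in for the limited bandwidth
    of an analogue video path; edge pixels are repeated to pad the row.
    """
    left, centre, right = kernel
    padded = [row[0]] + list(row) + [row[-1]]
    return [
        left * padded[i - 1] + centre * padded[i] + right * padded[i + 1]
        for i in range(1, len(padded) - 1)
    ]


def smear_image(image):
    """Smear each scanline independently: horizontal blur, no vertical blur."""
    return [smear_row(row) for row in image]


# Vertical-stripe dither of two colours, represented here as levels 0.0 and 1.0.
dither = [[0.0, 1.0] * 4 for _ in range(3)]

for line in smear_image(dither):
    print(line)
```

Away from the edges, every output value is 0.5, the in-between shade the artist was relying on. A display that recovers the pixels exactly shows the raw stripes instead.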

Composite colour encoding is a factor we'll skim over here, as not all retro technology relies on it. Still, even with nice clean RGB signals, the only way a display can identify individual pixels in each row is to ensure it's sampling each one at exactly the right time as the signal arrives. That's what a modern monitor or projector tries to do when you apply a VGA signal and select the "auto adjust" menu option. It looks for sharp edges in the signal and, knowing how many pixels there are per line, calibrates its timing to identify each one with pinpoint accuracy.

It works best if you apply a signal with alternating columns of pixels set to two different colours (red and blue, say) so that the monitor has a near-perfect timing reference. The red and blue signals just look like square waves, neatly defining the first and last pixels and every pixel in between.
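Here's a rough Python model of that kind of auto-adjust routine. It is a sketch under stated assumptions, not how any particular monitor's firmware works. It assumes an alternating-column pattern, a known pixel count per line, and edges that ramp linearly over a short rise time. The routine oversamples the line, finds where the signal crosses mid-level, averages those edge positions, and then samples half a pixel after each edge. The delay and rise-time values and all function names are made up for the illustration.

```python
import math
from functools import partial


def alternating_pattern(t, delay, rise):
    """Alternating-column test pattern as seen at the monitor input.

    Pixel i spans [delay + i, delay + i + 1) and is 1.0 (say, red) for even i
    and 0.0 for odd i. Each edge ramps linearly over `rise` pixel periods.
    """
    x = t - delay
    i = math.floor(x)
    frac = x - i
    current = 1.0 if i % 2 == 0 else 0.0
    previous = 1.0 - current
    if frac >= rise:
        return current
    return previous + (current - previous) * frac / rise


def find_sample_phase(signal, pixels_per_line, oversample=16):
    """Estimate where in each pixel period to sample, using the edges alone.

    The only prior knowledge assumed is the pixel count per line.
    Returns a phase in [0, 1) pixel periods.
    """
    dt = 1.0 / oversample
    times = [j * dt for j in range(pixels_per_line * oversample)]
    levels = [signal(t) for t in times]
    angles = []
    for j in range(len(levels) - 1):
        a, b = levels[j], levels[j + 1]
        if (a - 0.5) * (b - 0.5) < 0:  # the signal crosses mid-level here
            crossing = times[j] + (0.5 - a) / (b - a) * dt
            angles.append(2 * math.pi * (crossing % 1.0))
    # Average edge positions on a circle, so 0.99 and 0.01 count as close.
    mean_edge = math.atan2(sum(map(math.sin, angles)), sum(map(math.cos, angles)))
    edge_phase = (mean_edge / (2 * math.pi)) % 1.0
    return (edge_phase + 0.5) % 1.0  # sample half a pixel after each edge


PIXELS_PER_LINE = 32  # assumed known, as VGA timing would provide
# Hypothetical cable/circuit delay and edge rise time, in pixel periods.
signal = partial(alternating_pattern, delay=0.3, rise=0.2)

phase = find_sample_phase(signal, PIXELS_PER_LINE)
recovered = [signal(phase + k) for k in range(8)]
print(f"sample phase: {phase:.3f}")  # about 0.900 for these made-up values
print(recovered)
```

Because the pattern changes level at every pixel, every edge contributes to the timing estimate. A picture with long runs of the same colour would give the routine far fewer edges to work with.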

That works well with VGA signals, which have timing properties identifying how many pixels there should be per line (and even that fails in some specific situations). There isn't any widely used solution, however, to sampling a standard-definition video signal generated by a Commodore 64, with its 320 by 200-pixel resolution, or a Super Nintendo Entertainment System at anywhere between 256 by 224 and 512 by 478.

What usually happens is that the modern display samples the entire legal active picture area of the incoming standard-definition video signal at whatever resolution its designer thought was enough to capture all the available picture detail. Again, the dots may not line up, risking moiré, softness and awkward black borders, and perhaps missing some pixels entirely, which can make text illegible.
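Some rough arithmetic shows why the dots rarely line up. This sketch assumes the display digitises the signal the way Rec. ITU-R BT.601 does, at 13.5 MHz. It also assumes approximate source dot clocks of about 7.88 MHz for a PAL Commodore 64 and about 5.37 MHz for an NTSC SNES in its 256-pixel modes. Treat all of these figures as assumptions for the example, since a given display may sample differently. The helper name is hypothetical.

```python
SAMPLE_RATE_HZ = 13_500_000  # assumed: the BT.601 standard-definition sampling rate

# Assumed, approximate source dot clocks, for illustration only.
DOT_CLOCKS_HZ = {
    "Commodore 64 (PAL)": 7_881_984,
    "SNES, 256-pixel modes (NTSC)": 5_369_318,
}


def samples_per_pixel(sample_rate, dot_clock, n_pixels):
    """Count how many display samples fall inside each of the first n_pixels.

    Assumes sample 0 lands exactly on the leading edge of pixel 0. Integer
    arithmetic avoids floating-point rounding at pixel boundaries.
    """
    counts = [0] * n_pixels
    n = 0
    while True:
        pixel = (n * dot_clock) // sample_rate  # source pixel under sample n
        if pixel >= n_pixels:
            return counts
        counts[pixel] += 1
        n += 1


for machine, dot_clock in DOT_CLOCKS_HZ.items():
    ratio = SAMPLE_RATE_HZ / dot_clock
    print(f"{machine}: {ratio:.3f} samples per source pixel")
    print("  samples captured per pixel:", samples_per_pixel(SAMPLE_RATE_HZ, dot_clock, 12))
```

The ratios come out at roughly 1.71 and 2.51. Because neither is a whole number, some source pixels get one sample and their neighbours two (or two and three). That unevenness is what produces the moiré and softness.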

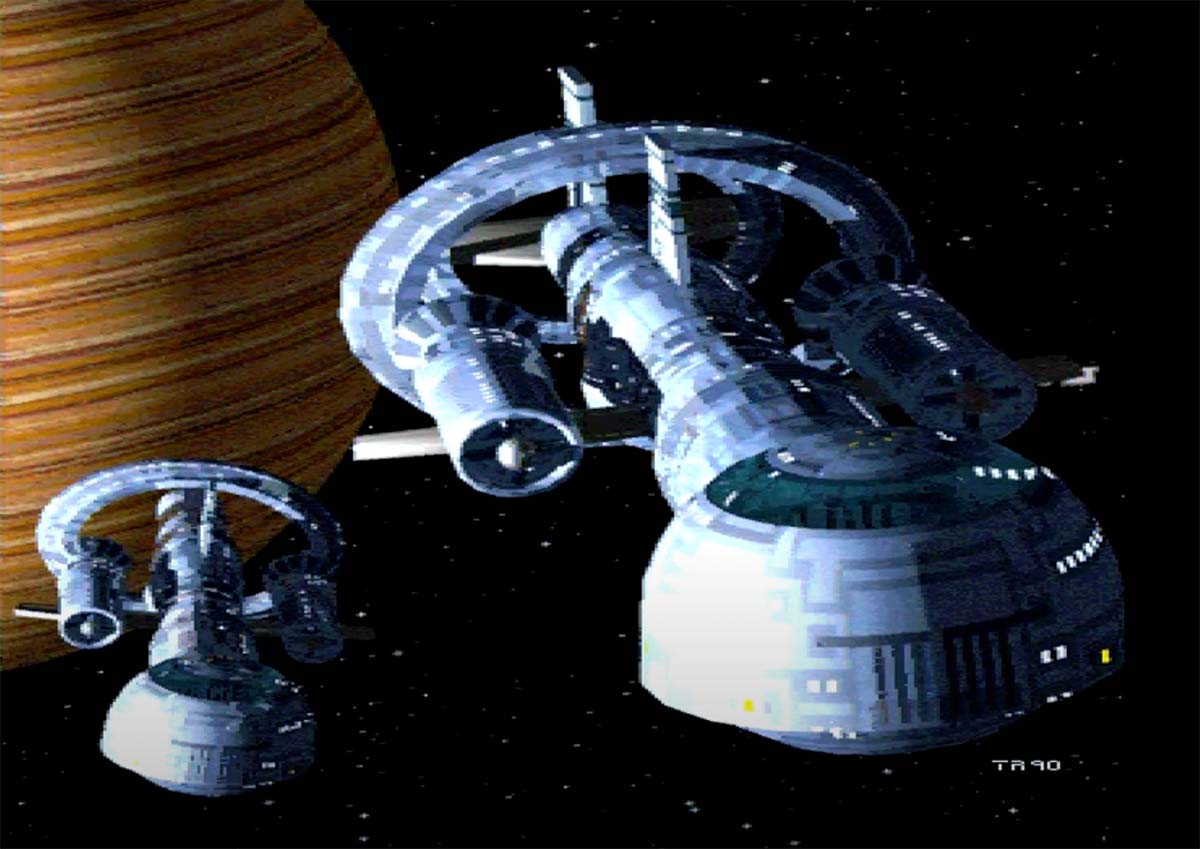

Images such as this, by Amiga artist Tobias Richter, looked photo-real to us back in the day!

Display latency

The second problem is that all this processing takes time. An analogue CRT monitor has no way to store the image because it doesn't need one; the signal arrives at the input connector and is sent, via some analogue electronics with vanishingly small latency, to the electron guns. Capturing a full frame before processing it is likely to delay things by at least one frame, and other processing might take another frame before the picture is displayed. So, take heart: if Sonic the Hedgehog seems harder than it used to be, your reactions may not have dulled since childhood. It's just that Sega designed the game around blindingly fast display technology.
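The numbers add up quickly. This sketch assumes one frame for capture plus possibly one more for processing. It ignores the panel's own response time and any delay inside the console itself. The function name is hypothetical.

```python
def added_latency_ms(frames_buffered, refresh_hz):
    """Delay added by holding whole frames before display, in milliseconds."""
    return 1000.0 * frames_buffered / refresh_hz


for hz in (60, 50):  # NTSC-region and PAL-region frame rates
    print(
        f"{hz} Hz: one frame = {added_latency_ms(1, hz):.1f} ms, "
        f"two frames = {added_latency_ms(2, hz):.1f} ms"
    )
```

That works out at somewhere between about 17 and 40 ms of extra delay, compared with practically none on a CRT.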

The third problem is that the resolution of that captured image probably isn't anything like the resolution of the modern display's flat panel.

One of the advantages of a CRT monitor is that it can handle a range of resolutions. Ask it to scan 320 lines per frame, and it draws 320 horizontal lines across the display with its electron guns. Ask for 1200 lines per frame, and it will draw 1200 lines per frame. Ask for any of a variety of frame rates and it'll happily redraw the frame at that rate. Many flat panel displays operate over a much more limited range of frame rates, and of course they have just one, fixed resolution. The concept of interlaced scan is also meaningless to an LCD or OLED.

Effectively all flat panel displays have hardware in them that's designed to solve these problems - a scaler. Most of them apply a reasonably quick scaling algorithm designed to minimise the visibility of quantisation noise (pixelation), leaving an image that's a far cry from the crisply precision-placed horizontal scanlines of a CRT display.
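The trade-off a scaler faces shows up even in one dimension. This sketch compares nearest-neighbour scaling with linear interpolation, stretching a five-pixel row to eight output pixels (a 1.6× scale). Linear interpolation is assumed here as a typical cheap smoothing choice; the article doesn't say which algorithm any given monitor uses. The function names are hypothetical.

```python
def scale_nearest(row, out_width):
    """Nearest-neighbour: every output pixel copies one source pixel."""
    scale = len(row) / out_width
    return [row[min(int((x + 0.5) * scale), len(row) - 1)] for x in range(out_width)]


def scale_linear(row, out_width):
    """Linear interpolation between the two nearest source pixel centres."""
    scale = len(row) / out_width
    out = []
    for x in range(out_width):
        src = (x + 0.5) * scale - 0.5  # output pixel centre in source coordinates
        src = min(max(src, 0.0), len(row) - 1.0)  # clamp at the row's ends
        left = int(src)
        right = min(left + 1, len(row) - 1)
        weight = src - left
        out.append((1 - weight) * row[left] + weight * row[right])
    return out


source = [0.0, 1.0, 0.0, 1.0, 0.0]
print(scale_nearest(source, 8))  # crisp levels, but pixels 1 or 2 outputs wide
print(scale_linear(source, 8))   # even spacing, but no pixel keeps its true level
```

Nearest-neighbour keeps every value crisp but makes some source pixels twice as wide as others. Linear interpolation hides that unevenness by blending neighbours, so none of the bright pixels reaches full brightness.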

In theory, it would be possible to allow users to make precision adjustments of the way a modern monitor samples a standard-definition signal. It would then be possible for the monitor to obtain a good record of exactly what the value of each pixel should be. Then, a more appropriate scaling algorithm could be used to fill the screen with a precision representation of each of the retro machine's pixels, with options to simulate interlace, horizontal blur, and other niceties.
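A minimal sketch of that last step follows. It assumes the retro machine's pixels have already been recovered exactly. It scales them by whole-number factors so that every source pixel becomes an identical block, and it dims the last line of each block as a crude imitation of the gaps between CRT scanlines. The 1920 by 1080 panel, the 0.5 gap level and the function names are illustrative assumptions. The sketch ignores non-square pixel aspect ratios and interlace.

```python
def largest_integer_scale(src_w, src_h, panel_w, panel_h):
    """Biggest whole-number scale at which the source still fits the panel."""
    return min(panel_w // src_w, panel_h // src_h)


def integer_scale_with_scanlines(image, scale_x, scale_y, gap_level=0.5):
    """Blow each source pixel up to an exact scale_x by scale_y block.

    When scale_y > 1, the last output row of each block is dimmed to
    gap_level of its brightness, imitating the dark gaps between scanlines.
    """
    out = []
    for row in image:
        wide = [value for value in row for _ in range(scale_x)]
        for line in range(scale_y):
            if scale_y > 1 and line == scale_y - 1:
                out.append([value * gap_level for value in wide])
            else:
                out.append(list(wide))
    return out


# A 320 by 200 picture on a hypothetical 1920 by 1080 panel.
print(largest_integer_scale(320, 200, 1920, 1080))

tiny = [[1.0, 0.0], [0.0, 1.0]]
for line in integer_scale_with_scanlines(tiny, 3, 2):
    print(line)
```

Whole-number scaling guarantees every source pixel the same size on screen. The cost is unused border, because a 5× scale fills only 1600 by 1000 of the panel.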

It would be a fairly minor piece of programming for anyone experienced with display firmware, and might create something saleable as a retro gaming display. So far, nobody has done it.
