Rakesh Malik analyses where Nvidia is heading with all things AI and Omniverse.

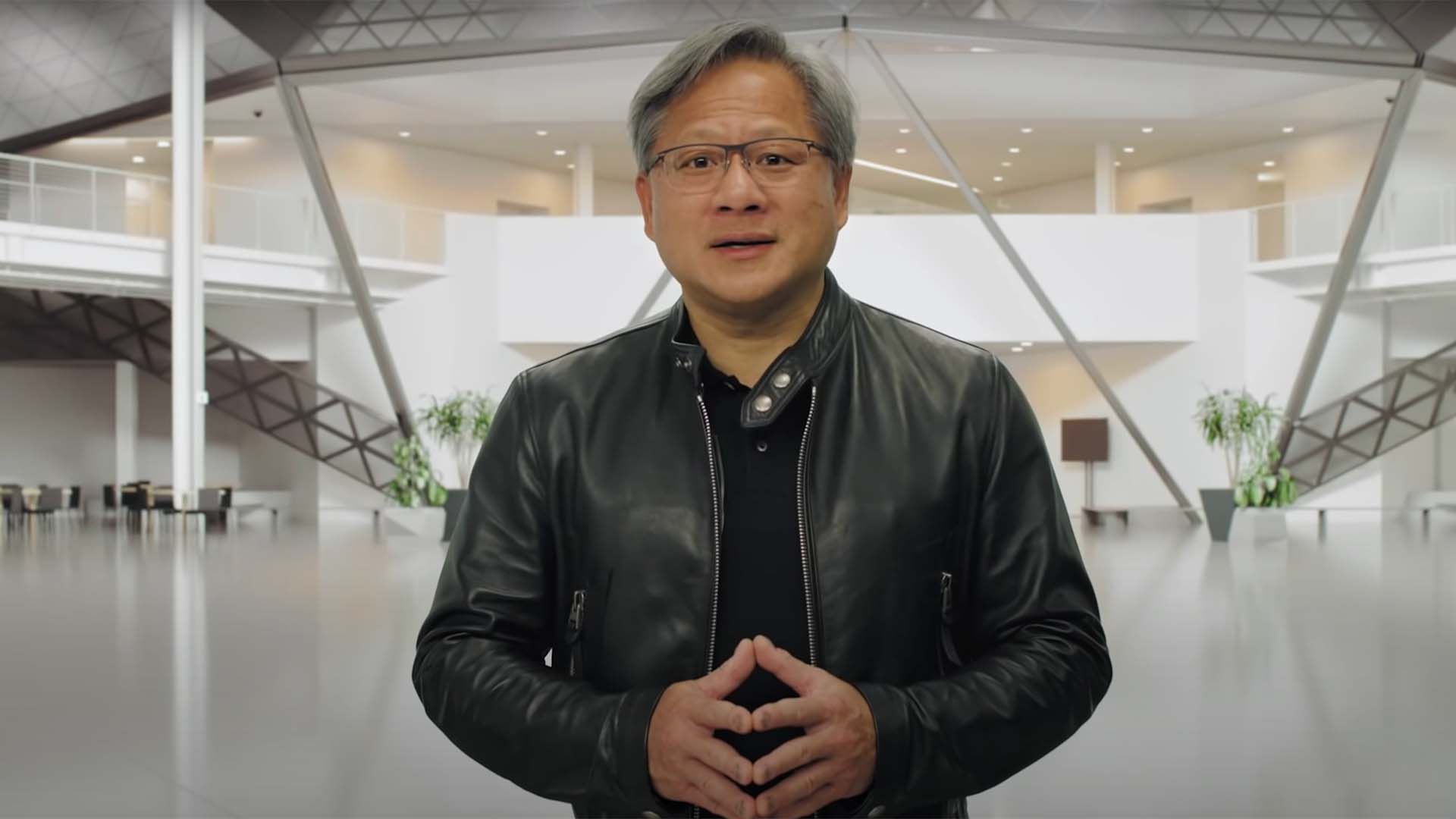

While most of us think of nVidia as a company that makes GPUs for gaming systems and workstations, the sheer breadth of nVidia's product and software offerings is immense. Jensen Huang opened his GTC keynote late last year with a presentation called “I Am AI,” showcasing a sampling of the vast constellation of products in the nVidia portfolio, such as AI-driven translation, climate modeling, and pollution monitoring, set against beautiful imagery courtesy of nVidia's creative team and technology... and accompanied by a score composed by an AI running on nVidia hardware.

One of the most common complaints about nVidia's successive generations of GPUs has been their ever-increasing power envelopes. According to rumor, the Ada Lovelace family of GPUs will top out at nearly 400 watts, which is simply insane.

However, in the context of datacenters and massive scientific simulations running on exascale supercomputing clusters, the story looks very different, because a single next-generation datacenter GPU can replace 30 entire dedicated workstations. So while the power consumption of an individual GPU is increasing, its computing power is increasing by a much larger margin, allowing a datacenter to save huge amounts of energy.

One of the barriers to adapting code for supercomputers is the programming overhead of scaling a program designed for a single machine up to a cluster. A developer needs to design the software so that it can be split into meaningful, independent units of work, then write the code to divide up that work, send each unit to a compute node to execute, gather the results, and combine them.
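The four steps described above (split, send, gather, combine) can be sketched in a few lines of Python. This is a minimal, single-machine illustration, assuming that worker threads stand in for cluster nodes and that the "work" is a simple sum of squares; the function names are hypothetical and not part of any nVidia library.

```python
from concurrent.futures import ThreadPoolExecutor


def split_work(data, num_chunks):
    """Split step: divide the input into roughly equal, independent chunks."""
    size = max(1, -(-len(data) // num_chunks))  # ceiling division, never zero
    return [data[i:i + size] for i in range(0, len(data), size)]


def process_chunk(chunk):
    """Work done on each 'node': here, a sum of squares over its chunk."""
    return sum(x * x for x in chunk)


def scatter_gather(data, num_workers=4):
    chunks = split_work(data, num_workers)
    # Send step: each worker thread plays the role of one compute node.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        # Gather step: collect the partial result from every chunk.
        partial_results = list(pool.map(process_chunk, chunks))
    # Combine step: merge the partial results into the final answer.
    return sum(partial_results)


if __name__ == "__main__":
    print(scatter_gather(list(range(1, 101))))
```

On a real cluster, the send and gather steps would go over the network (for example via MPI), which is exactly the boilerplate that toolkits and libraries try to hide from the developer.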

There are programming toolkits that help with this, like the asynchronous workflows in Microsoft's F# language for .NET, and Google's MapReduce programming model (itself a refinement of what used to be called “scatter-gather”), which is now available as open source in Apache Hadoop.
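MapReduce adds a “shuffle” step between scatter and gather that groups intermediate results by key before they are combined. The classic word-count example below sketches that model in plain, single-process Python; it is an illustration of the idea, not Hadoop's actual API, and the function names are hypothetical.

```python
from collections import defaultdict


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle_phase(mapped_pairs):
    """Shuffle: group every emitted value by its key, as the framework would across nodes."""
    groups = defaultdict(list)
    for key, value in mapped_pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: combine all the values emitted for a single key."""
    return key, sum(values)


def word_count(documents):
    # On a cluster, each document's map call could run on a different node.
    mapped = [pair for doc in documents for pair in map_phase(doc)]
    grouped = shuffle_phase(mapped)
    # Each key's reduce call is independent, so these could also run in parallel.
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(["the cat sat", "The dog sat"]))
```

The appeal of the model is that the developer writes only the map and reduce functions; distributing them and performing the shuffle is the framework's job.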

The new cuNumeric library that nVidia is introducing is a Python library, designed as a drop-in replacement for NumPy, built atop a software platform called Legion. Legion treats a supercomputing cluster like a single, giant processor, extracting units of work from existing code and automatically distributing them across the CPUs and GPUs of the entire cluster. Jensen says that cuNumeric can scale up to 1000 compute nodes with 80% efficiency. While that might sound like it's leaving a lot on the table, the best hand-optimized distributed code rarely reaches, let alone exceeds, 90% efficiency.
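Parallel efficiency is conventionally defined as the speedup divided by the number of nodes (E = S / N), so 100% means perfectly linear scaling. The short sketch below, with hypothetical function names, shows what the quoted figures imply in practice.

```python
def parallel_efficiency(speedup, num_nodes):
    """Fraction of ideal linear scaling achieved: speedup divided by node count."""
    return speedup / num_nodes


def implied_speedup(num_nodes, efficiency):
    """Speedup implied by an efficiency figure, i.e. the number of 'perfect' nodes."""
    return num_nodes * efficiency


if __name__ == "__main__":
    print(implied_speedup(1000, 0.8))  # cuNumeric figure quoted in the keynote
    print(implied_speedup(1000, 0.9))  # rough ceiling for hand-tuned code
```

In other words, 1000 nodes at 80% efficiency run roughly 800 times faster than one node, while the 90% ceiling for hand-optimized code would correspond to about 900 times. The gap is real but modest, given that cuNumeric asks for no distributed-programming effort at all.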

And that was just the start. nVidia has also been working toward a millionfold increase in computing power. The massive new GPUs are the building blocks, while what nVidia calls the most advanced networking device in the industry, together with the fastest NICs available, forms the nervous system. (You can probably see where this is going...)

Exploiting that enormous amount of computing power by hand would be daunting for any human programmer. So nVidia is developing a deep learning based system to help humans write code at that massive scale, which, according to Jensen Huang, delivers 5000x better performance than human-optimized code.

The aggregate performance boost he promised, from combining more raw computing power in each GPU, massive parallelism across many GPUs, and optimization by deep learning software, totals 250 million times the performance of previous-generation platforms.

What's all this being used for?

Several scientific research teams have been training AI models to understand physics and then using those models to simulate physics. Medical researchers taught their AI models molecular physics, then fed the models amino acid sequences and let them help decipher the structures of tens of thousands of proteins. Some researchers have used these models to create detailed simulations of protein interactions, accomplishing in three hours on a single GPU what would previously have taken a supercomputer three months.

Jensen Huang described a new nVidia technology called Modulus, used to build physicsML models that can represent physical systems like... the world. A supercomputer big enough to model the entire planet doesn't exist yet, but nVidia wants to change that.

This is where the GPU Technology Conference keynote finally comes around to game technology. All of that incredibly advanced networking and computing technology, paired with sophisticated machine learning based software, can simulate Earth... or a virtual Earth. The same technology that nVidia is using to help climate scientists model the effects of climate change thirty years into the future is also the platform upon which game developers can create living, breathing, physically based, persistent virtual worlds.

Since Omniverse is based on Pixar's open Universal Scene Description (USD) format, any development team that wants to can build an Omniverse connector. The existing constellation of Omniverse connectors reads like a who's who of 3D animation, including industry titans such as Houdini, Blender, ZBrush, Cinema 4D, and Maya.

Building virtual worlds with Omniverse

Siemens is working with nVidia to build a physicsML-based simulation of its grid-scale, steam-driven power plants that models how the facilities deteriorate, so that problems can be addressed before they cause downtime. BMW constructed a virtual factory and used it to train its robots in new skills that they then use to build real cars. Meanwhile, Ericsson has developed a digital twin of an entire city, down to the vegetation and building materials, in order to visualize and optimize its 5G network hardware deployment. By repurposing the GPU's ray-tracing hardware, Ericsson can model how network signals travel through the city and optimize its wireless network accordingly.

One interesting system that nVidia showcased is its Triton distributed inference server, which enables near-real-time inference at scale. When teamed with nVidia's self-supervised training, which can train massive neural nets at a scale far beyond what humans could label and manage on their own, these inference engines can make near-real-time decisions based on vast quantities of data, far exceeding what any human could process.

And Triton leads to robotics, where nVidia has developed a next-generation processor called Orin. It incorporates a massive amount of GPU and tensor computing power, as well as enormous bandwidth that allows it to ingest vast quantities of sensor data. Orin can also be supplemented with an Ampere GPU, and the result, developed in partnership with Mercedes, is an AI that can drive a car on the highway, merge into traffic, stop to let pedestrians cross in front of it, and basically act as a robotic chauffeur.

Jensen Huang's final announcement was that nVidia is building Earth-2, a global-scale simulation of the planet. It will showcase nVidia's ability to train an AI model to understand physics and feed it enough data to simulate the entire planet's future, in the hope that seeing that future will be incentive enough to convince the world to unite in dealing with climate change.

Although it focused on massive datacenter-scale products and robotics, the GTC presentation also offered a taste of what's coming for gaming and content creation. While the flagship use of Omniverse is simulating Earth, games will almost certainly be built on the Omniverse platform, providing the most detailed and interactive persistent worlds ever developed.

As exciting as Omniverse is, the fact that nVidia is developing artificial intelligence so advanced that it requires another artificial intelligence to train it is simply astonishing. It shows how far nVidia has pushed AI technology, and how ambitious its plans are to push it further still.

Our analysis of Nvidia's CES 2022 keynote to follow.

