Quantum computing might benefit us... some time in the future. Any moment now. Annny moment...

There are currently two things to know about quantum computing, at least if you're not a post-doctoral researcher in the field. The first is that the current buzz around the subject, while the stuff of venture capitalists' dreams, is almost certainly decades too soon. The second is that the inner workings are more or less defined by being impossible to explain by analogy to more familiar ideas.

First things first: despite breathless news stories about an apparently-imminent "quantum apocalypse," there is no immediate prospect of quantum computers being able to invalidate the sort of encryption that's widely used on the internet. Certainly, defeating something like the RSA encryption which makes the little padlock in your browser appear requires the ability to factor very large numbers quickly, and that is something that can potentially be done using a quantum computer.

It's worth pointing out that this task is actually one of only a very few that have been demonstrated on actual quantum computer hardware, and even then, the only factors that have been worked out are those of the two-digit number 15 (being 3 and 5) as opposed to the 155 decimal digits of even the weakest, 512-bit RSA key. The bits in quantum computers – qubits – have very different capabilities from those in conventional computers, but doing any serious work still requires a reasonably large collection of them. Current devices have somewhere between a handful and a little over a hundred (7 in an IBM experiment in 2001; 54 in Google's Sycamore, from 2019; IBM has promoted a 127-qubit machine), hence the celebrations over working out that 15 = 3 × 5.
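For a sense of what "factoring 15" involves, here is a minimal Python sketch of the classical bookkeeping usually described around Shor's algorithm, which the article does not name and which is assumed here to be the method in question. The function names `order` and `factor_via_order` are made up for illustration, and the order-finding step is done by brute force; that step is the part a quantum computer is hoped to accelerate. The last line simply checks the digit count of a 512-bit number.

```python
from math import gcd


def order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    Assumes gcd(a, n) == 1, otherwise the loop never terminates.
    This is the step a quantum computer is hoped to speed up.
    """
    r, x = 1, a % n
    while x != 1:
        x = (x * a) % n
        r += 1
    return r


def factor_via_order(n: int, a: int):
    """Try to split n using the order of a modulo n.

    Returns a pair of factors, or None if this choice of a is unlucky.
    """
    g = gcd(a, n)
    if g != 1:
        return g, n // g          # a already shares a factor with n
    r = order(a, n)
    if r % 2 == 1:
        return None               # odd order: pick another a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None               # trivial square root: pick another a
    return gcd(half - 1, n), gcd(half + 1, n)


print(factor_via_order(15, 7))    # (3, 5)
print(len(str(2 ** 512)))         # 155 decimal digits
```

With `a = 7` the order is 4, so the factors fall out of `gcd(7**2 - 1, 15)` and `gcd(7**2 + 1, 15)`. The brute-force loop is trivial for 15 and hopeless for a 155-digit number, which is the whole point.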

Quantum computers aren't necessarily faster

Quantum computers are also not necessarily faster than classical computers for all tasks (current quantum computers are technology demonstrators that generally aren't faster than classical computers for almost any task). Each operation may take considerably more time than a single operation on a classical computer. The (promised, future) benefit of a quantum computer is that each of those comparatively slow operations may do a lot more work, which in theory helps with factoring enormous numbers, simulating large molecules, searching big databases, and related tasks. Still, although a quantum computer may be Turing-complete and therefore capable of doing everything a classical computer can do, it is not expected to be faster at all those tasks.

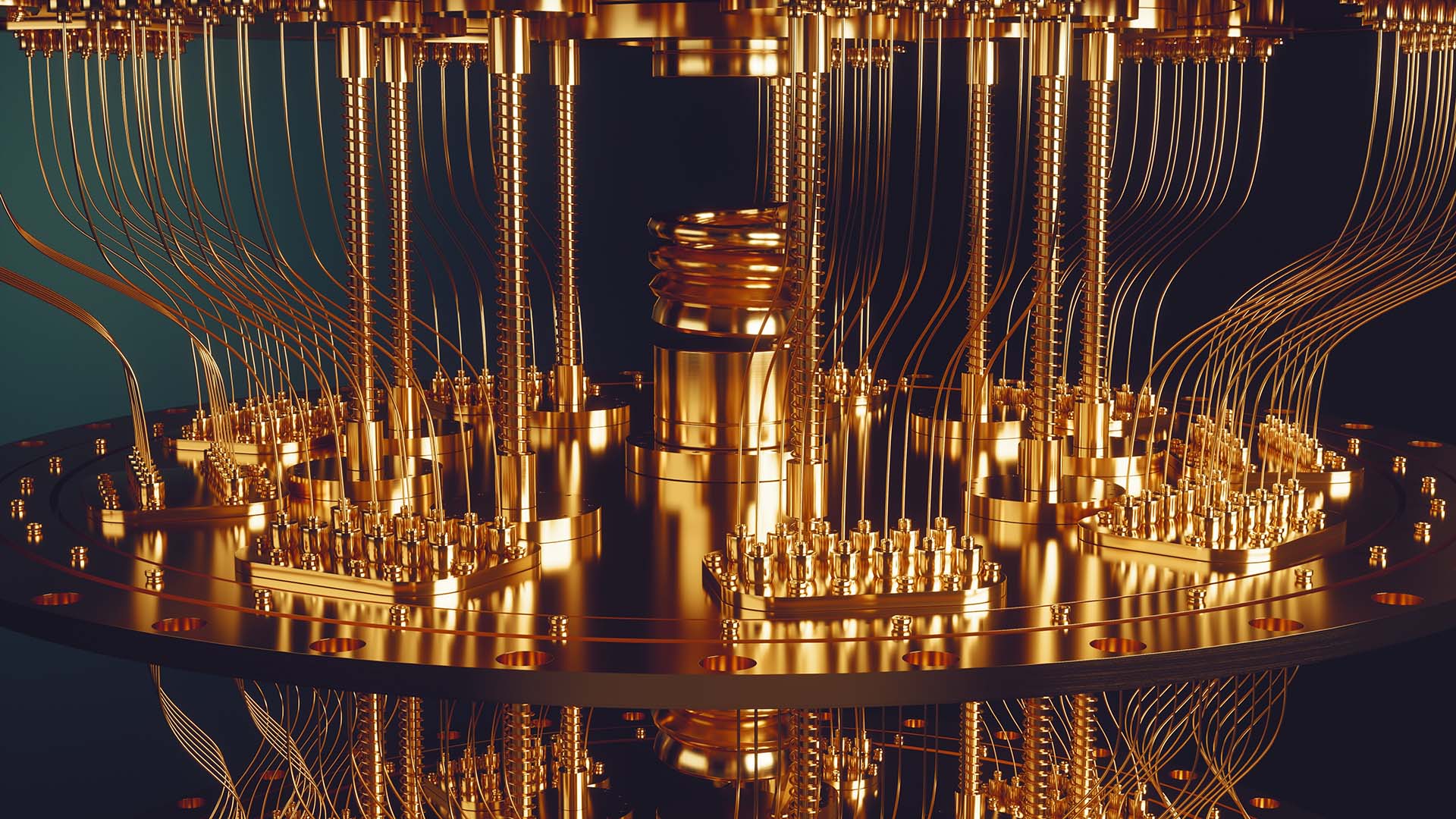

Actually constructing qubits - without getting into the impenetrable depths of entanglement, interference and superposition - is done in a few ways. They can be made using photons which can be polarised in various ways, or electrons which can have up or down spin, and those states can be controlled by bursts of microwaves at the right frequencies. All current approaches require hugely elaborate support, often involving some of the most advanced refrigeration technology we know how to create, achieving temperatures in the millikelvins.

Alongside that, we need the hardware required to inject microwave signals at frequencies relevant to the target particle - often tens of gigahertz. A small handful of those qubits is generally represented by a device the size of an oil drum accompanied by a labful of support gear. You can get access to some of them online, and marvel at the way they don't always quite work as they should, because quantum computer designs we have at the moment suffer some quite significant instability.

That's for two reasons. First, in many applications they effectively operate by running calculations repeatedly on different but equivalent data sets and thus working out what the probability of a particular result is, and increasing the certainty of that assessment over time. Second, frankly, they're glitchy. The state of subatomic particles used to represent states in quantum computers is only (even slightly) stable at very low temperatures, and since absolute zero is impossible to achieve, there's a hard limit on how stable they can be. Developing error checking to detect failures caused by this is a hot topic in current research and is anticipated to be a significant limiting factor for some time.
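The "run it many times and count" idea can be sketched in ordinary Python. This is a toy model, not a description of any real device: `noisy_run` is a hypothetical stand-in that returns the right answer only some of the time, and `p_correct` and the number of shots are arbitrary assumptions chosen for illustration.

```python
import random
from collections import Counter


def noisy_run(correct: int, n_outcomes: int, p_correct: float,
              rng: random.Random) -> int:
    """One simulated run: the right answer with probability p_correct,
    otherwise a uniformly random outcome (which may happen to be right)."""
    if rng.random() < p_correct:
        return correct
    return rng.randrange(n_outcomes)


def estimate(correct: int, n_outcomes: int, p_correct: float,
             shots: int, seed: int = 0):
    """Repeat the run and report the most frequent outcome and its share."""
    rng = random.Random(seed)
    counts = Counter(noisy_run(correct, n_outcomes, p_correct, rng)
                     for _ in range(shots))
    answer, hits = counts.most_common(1)[0]
    return answer, hits / shots


# Few shots give a shaky estimate; more shots make the peak stand out.
for shots in (10, 100, 2000):
    print(shots, estimate(correct=5, n_outcomes=8, p_correct=0.4, shots=shots))
```

Even when most individual runs are wrong, the correct outcome shows up more often than any single wrong one, so the tally converges on it as the shot count grows.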

Given all this, it's easy to get the impression that quantum computing is in more or less the same state that microelectronics was when the bipolar junction transistor was invented, in the mid-twentieth century. The literature then discussed individual transistors and the mechanics of making and using them; now we have billions of transistors built into something we consider a single device. Much as it's taken decades to get from there to here, it seems likely that we'll need decades to get quantum computers to a state that can actually threaten RSA encryption. And even then, there are quantum-computer-proof alternatives.

None of this is to say that quantum computing isn't a fascinating field - it's just a very difficult technology that's decades from mature. There's some disquiet within academia that the rush to venture capitalise quantum computing may actually be giving the field something of a bad name, and that's not great because it presents some genuinely impressive possibilities. Many of those possibilities can be tried right now using online tools such as IBM's, although much of what they do is emulated by a conventional computer set up to approximate what a quantum one might do. If it strikes you that doing research on quantum computers using an emulation on conventional ones is evidence of a lack of maturity from the quantum machines... well, it's hard to argue.
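Emulation only stretches so far, though. As a rough sketch, assume the most straightforward kind of emulator, one that stores a full state vector of 2^n complex amplitudes at 16 bytes each (both the approach and the byte count are assumptions here; real emulators use various tricks to do better). The hypothetical helper `statevector_bytes` shows how quickly the memory bill grows for the qubit counts mentioned earlier.

```python
def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to hold every amplitude of an n-qubit state.

    Assumes a naive full state-vector emulation with one
    double-precision complex number (16 bytes) per amplitude.
    """
    return (2 ** n_qubits) * bytes_per_amplitude


for n in (7, 54, 127):
    print(f"{n:>3} qubits: {statevector_bytes(n):,} bytes")
```

Seven qubits fit in a couple of kilobytes. At 54 the naive approach already needs hundreds of petabytes, and at 127 the number stops being meaningful as a storage requirement.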

So that's the reality check. Beware Silicon Valley startups bearing qubits.
