Not long ago, we reported here on AMD's conservative approach to GPUs; we predicted that not until Navi would AMD be able to compete with Nvidia. In February, AMD surprised everyone by launching the Radeon VII at $700, billing it as a GPU that could enable 4K gaming. Were we wrong? Or has AMD been running a skunkworks program behind the scenes?

The answer is neither. AMD has been making small volumes of high-margin GPUs designed for HPC (high-performance computing, aka supercomputing) applications. These GPUs are heavily biased toward straight-up number crunching rather than the latest graphics features, such as real-time ray tracing (Nvidia's RTX), Deep Learning Super Sampling (DLSS) for AI-based upscaling, and hardware video encoding and decoding.

As a result, Nvidia is leading the pack in gaming. Gamers are generally happy with the image-quality improvement that RTX enables, but DLSS undermines that benefit by softening the image too much; many gamers disable DLSS and lower the resolution slightly to keep frame rates up with ray tracing enabled.

The Radeon VII, on the other hand, has none of that. Its video encoders haven't kept up, and it has neither hardware ray tracing nor tensor cores.

First off, AMD was able to launch this card so quickly because of two things.

Design adaptation

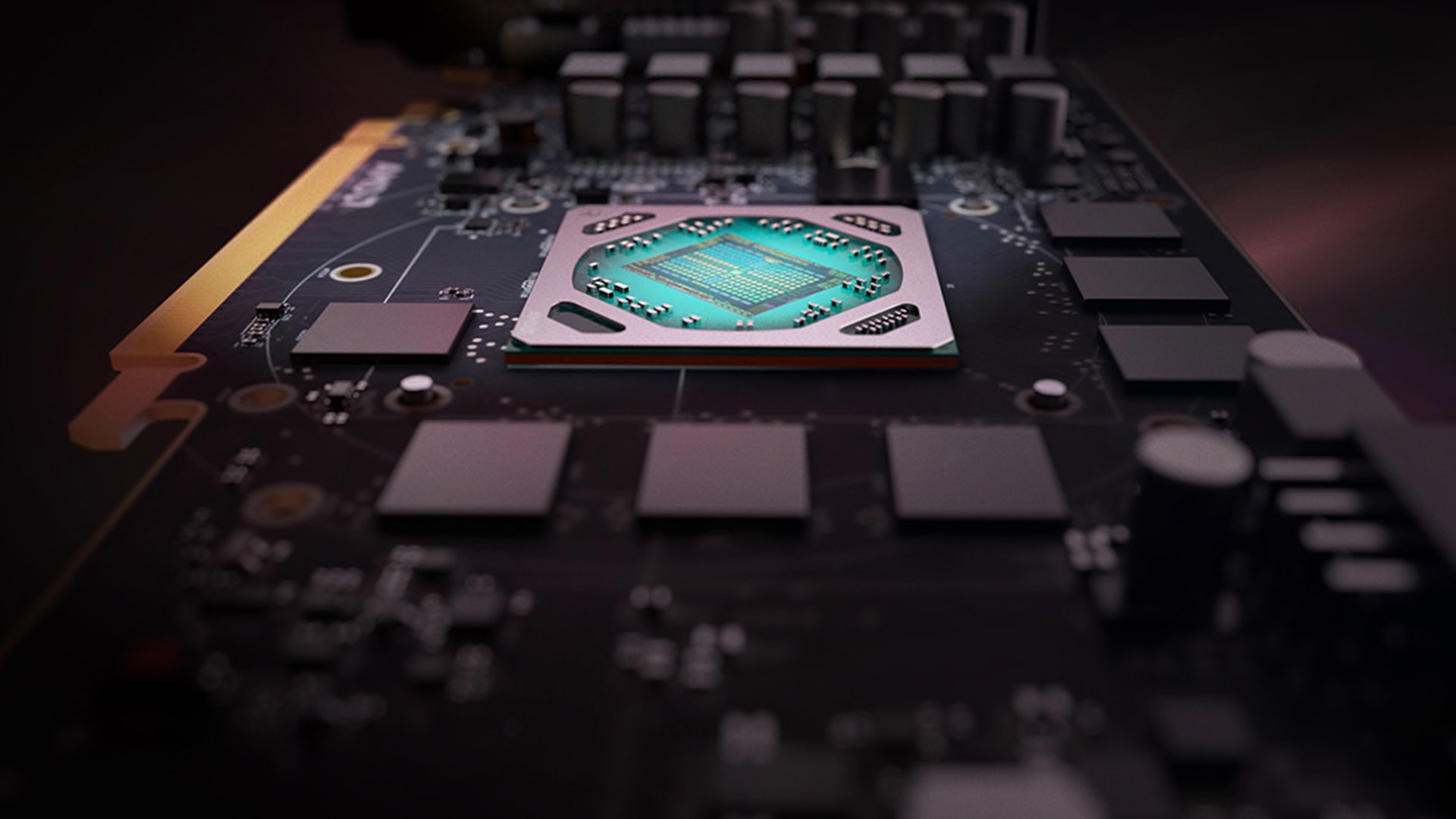

One, it's not a new design; rather it's an adaptation of the Instinct MI50.

Two, AMD is working with TSMC's new 7nm process.

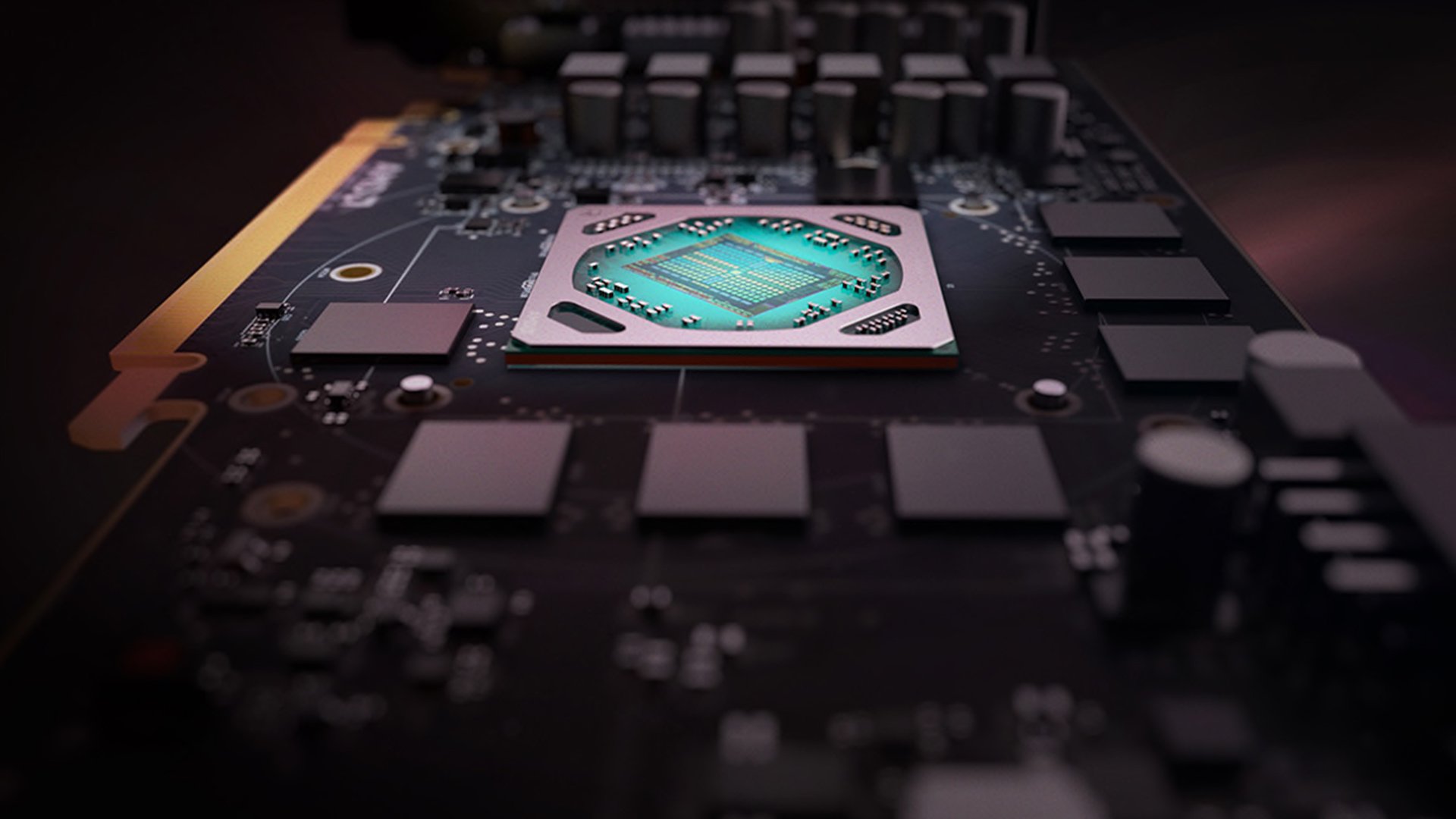

AMD's hope is that it can make up for the feature deficit with brute-force computing power combined with a huge 16GB frame buffer built from four stacks of second-generation High Bandwidth Memory (HBM2), with a staggering aggregate bandwidth of 1024 GB/s. Yes, that's a full terabyte per second, not a number one would normally associate with consumer products... because it wasn't designed as a consumer product.
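The 1024 GB/s figure follows from simple arithmetic. Here is a minimal sketch, assuming each of the four HBM2 stacks has a 1024-bit interface running at 2.0 Gbit/s per pin; those two values are not stated in this article and are taken as assumptions from the card's published memory configuration.

```python
def hbm_bandwidth_gb_s(stacks: int, bits_per_stack: int, gbit_per_pin: float) -> float:
    """Aggregate memory bandwidth in GB/s (1 GB = 10**9 bytes)."""
    total_bus_bits = stacks * bits_per_stack      # width of the whole memory bus
    return total_bus_bits * gbit_per_pin / 8      # bits per second -> bytes per second

# Assumed values: 4 stacks x 1024 bits each, 2.0 Gbit/s per pin.
radeon_vii = hbm_bandwidth_gb_s(stacks=4, bits_per_stack=1024, gbit_per_pin=2.0)
print(radeon_vii)  # 1024.0
```

In other words, the headline number comes from the very wide 4096-bit bus rather than from an unusually high per-pin data rate.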

The peak computing throughput of this new GPU is 3.5 teraflops for 64-bit arithmetic... but nearly 14 teraflops for 32-bit, and nearly 28 for 16-bit.
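These three peak figures are tied together by fixed ratios. The sketch below is a back-of-the-envelope check, not a benchmark. It assumes 3840 stream processors, a 1.8 GHz peak clock, one fused multiply-add (two floating-point operations) per processor per clock, 64-bit math at one quarter of the 32-bit rate, and packed 16-bit math at twice the 32-bit rate. None of these values are given in the article.

```python
STREAM_PROCESSORS = 3840   # assumed shader count
PEAK_CLOCK_GHZ = 1.8       # assumed peak engine clock
FLOPS_PER_CLOCK = 2        # one fused multiply-add counts as two operations

def peak_tflops(rate_vs_fp32: float) -> float:
    """Theoretical peak in teraflops, scaled relative to the 32-bit rate."""
    gflops = STREAM_PROCESSORS * FLOPS_PER_CLOCK * PEAK_CLOCK_GHZ * rate_vs_fp32
    return gflops / 1000

fp64 = peak_tflops(0.25)   # assumed quarter-rate double precision
fp32 = peak_tflops(1.0)
fp16 = peak_tflops(2.0)    # assumed double-rate packed half precision

print(f"FP64 {fp64:.1f}  FP32 {fp32:.1f}  FP16 {fp16:.1f} TFLOPS")
```

Under those assumptions the results land on roughly 3.5, 13.8, and 27.6 teraflops, in line with the figures quoted above.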

Those of us who use GPUs for software like Resolve aren't generally concerned with 64-bit performance; we're mostly interested in 16- and 32-bit throughput, which shows the Radeon VII in a decidedly different light.

On my Alienware M15 with its hexacore i7 and 32 GB of memory, I'm able to get smooth playback of 8K RED footage with the de-Bayer resolution set to half resolution, or 6K. That's pretty good for a compact laptop, especially since the RED decode isn't using the GPU for wavelet decompression yet. GPU decompression will come first to Nvidia cards because Nvidia has been working closely with RED to enable it, but RED plans to port the optimizations to OpenCL as well once the CUDA version is complete.

Real-world performance

While doing some compositing for a short film shot in 8K, I set up a Fusion composition with three 8K source clips. With the laptop's built-in Nvidia GPU and its 8GB of memory, I have to reduce the de-Bayer resolution to ¼ in order to render this short; any higher and the GPU runs out of memory and crashes the render.

With the Radeon VII, the render completes with the de-Bayer resolution set to full, without any dropped frames or GPU memory overruns.
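To get a rough feel for why de-Bayer resolution matters so much for GPU memory, here is an illustrative estimate of uncompressed frame sizes. It assumes an 8192×4320 frame held as four channels of 32-bit floats; the buffer format Fusion actually uses, and how many intermediate images it keeps on the GPU, are not described here, so treat the numbers as a sketch rather than a measurement.

```python
def frame_gib(width: int, height: int, channels: int = 4, bytes_per_channel: int = 4) -> float:
    """Size of one uncompressed frame in GiB (assumed RGBA, 32-bit float)."""
    return width * height * channels * bytes_per_channel / 2**30

FULL_W, FULL_H = 8192, 4320   # assumed 8K frame dimensions

for label, divisor in [("full", 1), ("half", 2), ("quarter", 4)]:
    size = frame_gib(FULL_W // divisor, FULL_H // divisor)
    # Three source clips, as in the composition described above.
    print(f"{label:>7}: {size:.3f} GiB per frame, {3 * size:.3f} GiB for three sources")
```

Each halving of the de-Bayer resolution cuts the per-frame footprint to a quarter. The source frames are only part of the working set, since every node in a composition can add its own intermediate buffers, which is where a 16GB card has far more headroom than an 8GB one.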

It was a clever move by AMD: take an HPC-optimized GPU that normally sells for approximately $3000 and sell a consumer-oriented version for $700. The sheer size of its memory buffer makes it a great value for image processing applications like compositing and color grading.

And for those who work with 3D animation software such as Cinema 4D, which incorporates AMD's ProRender rendering engine, it's also a great choice; ProRender is extremely fast on the Radeon VII.

Though AMD is still trailing Nvidia, the Radeon VII is hopefully a sign of what we can expect when the company launches the highly anticipated Navi GPUs later this year.
