One of the consequences of the massive R&D spend on the computer in your pocket is that new technology is appearing there first — and that includes some tools and techniques that we’d like to see on our film and video cameras.

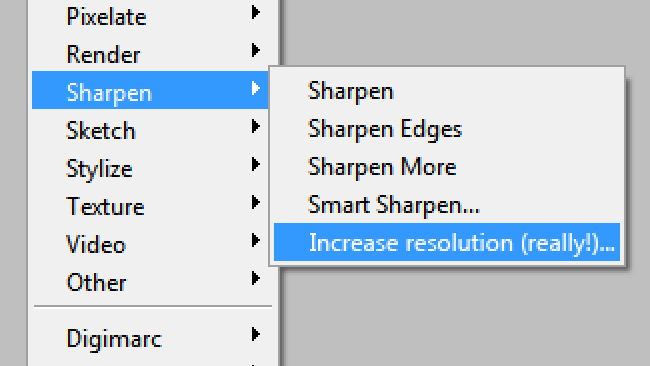

It wasn't long ago that increasing the “sharpness” control on a camcorder did nothing but introduce a bunch of unpleasant edge enhancement, the video equivalent of pushing up the high frequencies on a cassette player in a desperate attempt to improve the way it sounded. With sound, all that really did was increase the noise level, and it did much the same thing with video, too. This sort of disappointment has led to an ostensibly quite reasonable reluctance, in the minds of technical people, to accept that post-production processing can genuinely make technical improvements to pictures.

Background blur rendered by the iPhone 7 is Gaussian in character and doesn't betray much personality in its bokeh (courtesy Apple)

Grading is another issue — that's subjective. To date, though, we've perhaps tolerated Unsharp Mask filtering as a way to improve the apparent sharpness of images, perhaps writing it off as just a matter of aperture correction, but in general, there's a degree of grumpiness that goes along with any attempt to add information that wasn't already there.
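For anyone who hasn't looked inside an Unsharp Mask filter, a minimal sketch on a single row of pixel values shows why the grumpiness is justified. The box blur, the `amount` and `radius` parameters and the sample values are all assumptions made for illustration, not any particular product's implementation.

```python
def box_blur(signal, radius=1):
    """Blur a 1-D signal with a moving average, clamping the window at the ends."""
    n = len(signal)
    out = []
    for i in range(n):
        lo = max(0, i - radius)
        hi = min(n - 1, i + radius)
        window = signal[lo:hi + 1]
        out.append(sum(window) / len(window))
    return out


def unsharp_mask(signal, amount=1.0, radius=1):
    """Add back the difference between the signal and a blurred copy of itself."""
    blurred = box_blur(signal, radius)
    return [s + amount * (s - b) for s, b in zip(signal, blurred)]


# A soft edge running from dark (0.0) to bright (1.0); illustrative values only.
soft_edge = [0.0, 0.0, 0.0, 0.25, 0.5, 0.75, 1.0, 1.0, 1.0]
sharpened = unsharp_mask(soft_edge, amount=1.0, radius=1)

for before, after in zip(soft_edge, sharpened):
    print(f"{before:5.2f} -> {after:6.3f}")
```

The flat areas and the middle of the ramp come out unchanged. All the filter does is undershoot just before the edge and overshoot just after it, which reads as sharper to the eye but contains nothing the original samples didn't already imply.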

And to be fair, that is genuinely impossible in terms of formal information theory. What allows the human brain to look at a soft picture and tell that it's soft is that we aren't solely relying on the information in the picture: we're relying on a lifetime's experience of how vision works. We know that a human hair is not a soft-edged object, and if one is depicted with a soft edge, we know that's an artefact of the camera and not the hair.

Given that it's at least theoretically possible to give that information to a computer, too, advanced tricks such as post-production refocussing of soft images are not impossible. They may be extremely difficult, perhaps utterly impractical, if we suppose that we must teach a computer what a face looks like so that it can refocus that face accurately enough to avoid chatter in full-motion video. Difficult, though, is not the same as theoretically and formally impossible. This is, in general, roughly what computational photography seeks to achieve: the application of outside information about a scene to some desirable end.

The pace of innovation

Perhaps unsurprisingly, the frantic pace of smartphone innovation has given us some of the first consumer-facing applications. Google released an app for Android phones a couple of years ago which allowed users to produce a moderately accurate simulation of shallow depth of field by sliding the camera upward for a few seconds during the process of taking the shot.

This naturally only works well on static subjects, and the app seems to have vanished since, but it's a great example. The phone is, in this case, estimating the depth of objects in the scene using their offset as viewed from various different angles. Whether we call this stereoscopy or a one-dimensional lightfield (possibly the latter is more accurate), it's using extra information about a scene to do something to that scene.
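The geometry behind that depth estimate can be sketched with the standard pinhole-camera relationship, in which depth is focal length times baseline divided by the apparent offset (the disparity). This is a simplified illustration, not Google's actual method, and the focal length, slide distance and disparity figures are assumed values.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Estimate distance to an object from its apparent shift between two viewpoints.

    focal_length_px: lens focal length expressed in pixels (assumed value below)
    baseline_m:      distance the camera moved between the two views, in metres
    disparity_px:    how far the object appears to shift between the views, in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero shift means infinite distance")
    return focal_length_px * baseline_m / disparity_px


# Assumed figures: a 3000-pixel focal length and a 10 cm upward slide of the phone.
focal_length_px = 3000.0
baseline_m = 0.10

near_depth_m = depth_from_disparity(focal_length_px, baseline_m, 300.0)
far_depth_m = depth_from_disparity(focal_length_px, baseline_m, 30.0)

print(f"object shifting 300 px is about {near_depth_m:.1f} m away")
print(f"object shifting 30 px is about {far_depth_m:.1f} m away")
```

Objects that shift a lot between views are close and objects that barely move are far away. A depth map is this calculation repeated for every matched point in the scene.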

The Nokia Ozo 360-degree camera. Processing the multiple images into a single, artifact-free spherical image involves significant computational effort

Apple's implementation of a similar background-blur effect on the iPhone 7 has been described in rather different terms. Phrases like “machine learning” are thrown around a lot, with the implication that the phone knows enough about human beings to produce depth maps of them. The uneasy creaking noise associated with these claims is the sound of stretched credibility, and there is a brief mention of the phone using both of its cameras simultaneously to help out. The interocular offset between them would be tiny by the standards of conventional stereography, and the depth signal therefore subject to considerable noise on typical scenes, but it is possible.

The proof of this would be to try the feature out with a non-human subject, but either way it's probably safe to assume, despite the increasing excellence of smartphone cameras, that the need for proper stills cameras with large sensors isn't going anywhere.

Computational photography in your pocket...and on your shoulder?

Fraunhofer's work with lightfield technology is a prominent example of computational photography

This sort of thing is almost inevitably coming to film and television cameras too. The work done on lightfield cameras by Fraunhofer is in broadly the same vein as Google's idea of sliding cameras around, except that with a whole array of cameras, we can get information about what the scene looks like from multiple angles at once. Depth mapping, something adjacent to true 3D stabilisation, and stereoscopy can all be extracted from the information recorded. There is a degree of approximation involved, since these techniques rely on detecting the same object in the images of two adjacent cameras and calculating its distance based on the apparent offset between the two. At long distances, this becomes a very small offset defined by slight variations in the brightness of a pixel, and it is naturally subject to noise.
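To put rough numbers on that noise problem, here is a small sketch of how a fixed error in the measured offset turns into a growing error in estimated distance. The focal length, camera spacing and half-pixel matching error are assumptions chosen for illustration, not figures from Fraunhofer's system.

```python
def depth_bounds(focal_length_px, baseline_m, true_depth_m, disparity_error_px):
    """Return the (nearest, farthest) depth consistent with a noisy disparity reading."""
    scale = focal_length_px * baseline_m          # depth = scale / disparity
    true_disparity = scale / true_depth_m
    nearest = scale / (true_disparity + disparity_error_px)
    low_disparity = true_disparity - disparity_error_px
    # If noise can swallow the whole offset, the object could be infinitely far away.
    farthest = scale / low_disparity if low_disparity > 0 else float("inf")
    return nearest, farthest


# Assumed setup: 3000 px focal length, cameras 5 cm apart, matching good to +/- 0.5 px.
focal_length_px = 3000.0
baseline_m = 0.05
disparity_error_px = 0.5

spreads = {}
for depth_m in (1.0, 5.0, 20.0):
    nearest, farthest = depth_bounds(focal_length_px, baseline_m, depth_m, disparity_error_px)
    spreads[depth_m] = farthest - nearest
    print(f"true {depth_m:5.1f} m -> estimate between {nearest:6.2f} m and {farthest:6.2f} m")
```

With these assumed figures, the same half-pixel uncertainty that costs a few millimetres at one metre costs a couple of metres at twenty. That is why widely spaced camera arrays have an easier time of it than two lenses a centimetre apart.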

Making this work in the confines of a research lab with access to high-quality cameras is one thing. Doing it on a pocket-sized, battery-powered, very affordable piece of consumer electronics is quite another, in terms of both the quality of images required and the computing resources needed to execute most types of computational photography.

Google's Pixel has a 12.3 megapixel camera, but we're all aware that numbers like that mean very little on their own. Perhaps the fact that the sensor is (in the rather confusing nomenclature of obsolete video camera tubes) a 1/2.3” device is more illuminating. That's rather large for a smartphone sensor. It's slightly, though not meaningfully, larger than that on the iPhone 7, which also has a fractionally lower resolution. It's remarkable that the pictures created by very low-cost sensors like this are good enough to use for this sort of magic trickery.

Decades ago, the military always got the best technology first, because they had funding motivated by cold-war paranoia. Even now, we might still expect to see the cleverest tricks appear on high-end professional gear first. What's actually happening is that the sheer scale of R&D on consumer pocket technology is impossible to keep up with, and even advanced techniques such as computational photography are starting to appear there first. That's great, but it can be a bit frustrating. The Google Pixel phone uses its accelerometer to detect camera motion and implement rolling shutter correction. Isn't that a feature we'd all like to see on movie cameras, too?
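The principle of rolling shutter correction is simple enough to sketch. A rolling-shutter sensor reads its rows one after another, so if the motion sensor reports how fast the image was sliding across the sensor, each row can be shifted back by however far the picture moved while that row was waiting to be read. This is a deliberately simplified, one-axis illustration with assumed numbers; it is not Google's implementation, and a real system would have to deal with rotation and sub-pixel shifts as well.

```python
def correct_rolling_shutter(image, row_time_s, velocity_px_per_s, fill=0):
    """Undo horizontal skew by shifting each row against the measured motion.

    image:             list of rows, each a list of pixel values
    row_time_s:        delay between reading one row and the next (assumed known)
    velocity_px_per_s: horizontal image motion reported by the motion sensor
    fill:              value used where a shifted row leaves a gap
    """
    corrected = []
    for row_index, row in enumerate(image):
        # Row k is read k * row_time_s after row 0, by which time the image has moved.
        shift = round(velocity_px_per_s * row_index * row_time_s)
        width = len(row)
        new_row = [fill] * width
        for x, value in enumerate(row):
            target = x - shift
            if 0 <= target < width:
                new_row[target] = value
        corrected.append(new_row)
    return corrected


# A vertical bar that has been skewed into a diagonal by sideways motion during readout.
skewed = [
    [0, 1, 0, 0, 0, 0],
    [0, 0, 1, 0, 0, 0],
    [0, 0, 0, 1, 0, 0],
    [0, 0, 0, 0, 1, 0],
]

# Assumed figures: 1 ms per row and 1000 px/s of motion, i.e. one pixel of skew per row.
straightened = correct_rolling_shutter(skewed, row_time_s=0.001, velocity_px_per_s=1000.0)

for row in straightened:
    print(row)
```

The diagonal bar comes back as a vertical one, at the cost of blank pixels at one edge of the frame that would need to be cropped or filled in.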
